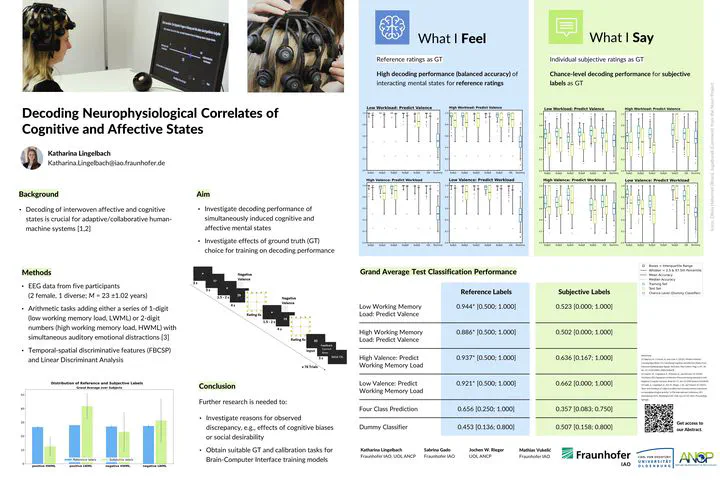

What I feel and what I say: Decoding neurophysiological correlates of cognitive and affective states

Jul 1, 2021

M.Sc. Katharina Lingelbach

Sabrina Gado

Jochem W. Rieger

Mathias Vukelić

Poster on the NEC 21

Abstract

For neuroergonomic applications, robust decoding of activation patterns indicating current affective or cognitive states is a crucial step in developing adaptive and collaborative human-machine systems that increase performance, safety, and user experience [1-6]. So far, most studies attempting to estimate affective and cognitive states from electroencephalographic (EEG) signals have used strictly controlled stimuli and tasks to induce a single, isolated mental state. Classification results from machine learning models were promising when estimating workload but rather modest to poor when estimating affective-emotional states [4]. In naturalistic environments, however, we are seldom confronted with isolated stimuli that result in one mental state; rather, we experience a composition of interwoven affective and cognitive states in response to complex stimuli. Especially in naturalistic applications, choosing a good approximation to the ground truth that adequately represents a person’s true mental states is a decisive step when training decoding models. The ground truth can be estimated either from characteristics of the experimental condition (i.e., hypothetically estimated) or by asking participants to provide labels based on their experiences via post-hoc ratings or questionnaires. In this study, we investigate the decoding performance for simultaneously induced cognitive and affective mental states with a filter-bank common spatial pattern (FBCSP) and linear discriminant analysis (LDA) approach [4,7]. In addition, we are interested in how decoding performance changes when subjectively rated labels are used instead of the experimentally induced labels.

Type

Publication

3rd Neuroergonomics Conference 2021, Munich